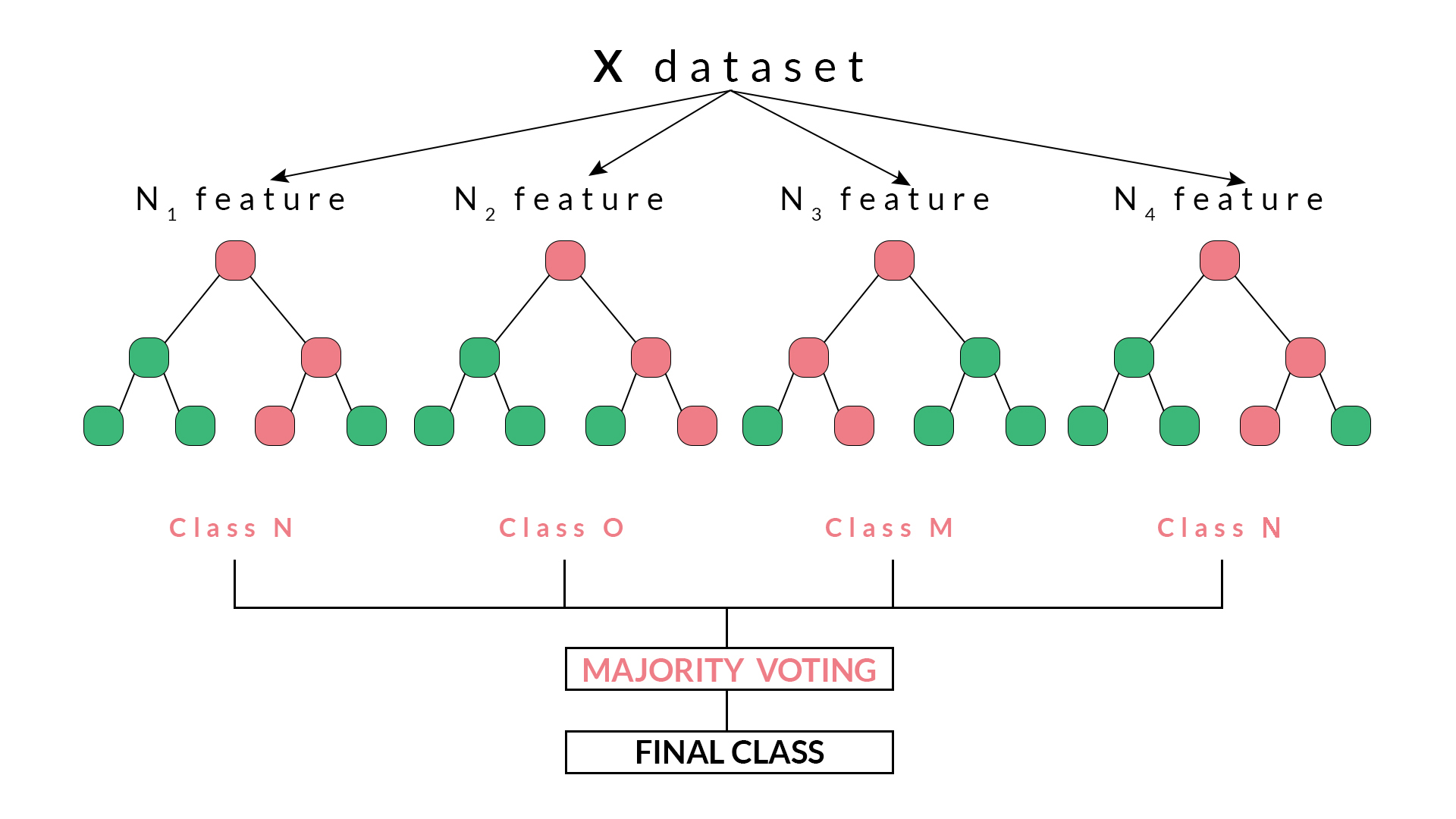

Random Forest Regression Example | Random forest is a supervised machine learning algorithm based on ensemble learning. It is a bagging technique, not a boosting technique: all the base models are constructed independently, each using a different subsample of the data. This page looks at random forest regression along with its implementation in Python.
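The bagging idea can be illustrated without any library. The following is a minimal sketch, not a full random forest: each base model is a one-split regression tree (a "stump") fitted on its own bootstrap subsample, and the ensemble prediction is the average of the base models. The function names and the toy experience/salary numbers are hypothetical and chosen only for illustration.

```python
import random
from statistics import mean


def fit_stump(xs, ys):
    """Fit a one-split regression tree by minimising the squared error."""
    best = None
    # Try every distinct x value except the largest as a split threshold,
    # so that both sides of the split are non-empty.
    for threshold in sorted(set(xs))[:-1]:
        left = [y for x, y in zip(xs, ys) if x <= threshold]
        right = [y for x, y in zip(xs, ys) if x > threshold]
        left_mean, right_mean = mean(left), mean(right)
        sse = sum((y - left_mean) ** 2 for y in left)
        sse += sum((y - right_mean) ** 2 for y in right)
        if best is None or sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    if best is None:
        # All x values in the sample are identical: predict the overall mean.
        overall = mean(ys)
        return lambda x: overall
    _, threshold, left_mean, right_mean = best
    return lambda x: left_mean if x <= threshold else right_mean


def fit_bagged_stumps(xs, ys, n_models=25, seed=0):
    """Fit each base model independently on its own bootstrap subsample."""
    rng = random.Random(seed)
    n = len(xs)
    models = []
    for _ in range(n_models):
        idx = [rng.randrange(n) for _ in range(n)]  # sample with replacement
        models.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return models


def predict(models, x):
    """Ensemble prediction: the average over all base models."""
    return mean(m(x) for m in models)


# Hypothetical toy data: years of experience vs. salary (in thousands).
experience = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
salary = [40, 42, 45, 50, 55, 62, 70, 80, 92, 105]

models = fit_bagged_stumps(experience, salary)
low = predict(models, 2)
high = predict(models, 9)
print(f"predicted salary at 2 years: {low:.1f}, at 9 years: {high:.1f}")
```

Because no base model depends on another, the loop in `fit_bagged_stumps` could run in any order or in parallel, which is the practical difference from boosting, where each model is fitted to the errors of the previous ones.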

Random forest regression is often described as one of the faster machine learning algorithms that still gives accurate predictions for regression problems. It works on the principle that a number of weak estimators, combined together, form a stronger one. To understand this concept with an example, consider the salaries of employees and their experience in years.

The trees in a random forest are built in parallel, independently of one another. The algorithm operates by constructing a multitude of decision trees at training time and outputting the mean (for regression) or the mode (for classification) of the predictions of the individual trees. In scikit-learn the model is imported with `from sklearn.ensemble import RandomForestRegressor`, and the predicted regression target of an input sample is computed as the mean of the predictions of the trees in the forest. Fitting the regression model to the dataset takes two statements: `regressor = RandomForestRegressor(n_estimators=100, random_state=50)` followed by `regressor.fit(x_train, y_train.ravel())`, where `ravel()` flattens the target to avoid a `DataConversionWarning`. The hyperparameters can be tuned with `GridSearchCV`. Use random forest regression to model your operations. A common question is whether a random forest model of the regression type can serve as a substitute for a logistic regression model. For plotting variable importance (VIMP) in R, the `plot.gg_vimp` function covers the case where there are both negative and positive VIMP values.
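A runnable version of the fitting step might look like the sketch below. The data is synthetic and hypothetical (salary as a noisy linear function of experience); only `RandomForestRegressor(n_estimators=100, random_state=50)` and the `ravel()` call come from the text. The last lines check that the forest's prediction is the mean of the individual trees' predictions.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.model_selection import train_test_split

# Hypothetical synthetic data: salary (thousands) vs. years of experience.
rng = np.random.default_rng(0)
experience = rng.uniform(0, 20, size=(200, 1))
# The target is deliberately a column vector of shape (200, 1).
salary = 30 + 4 * experience + rng.normal(0, 3, size=(200, 1))

x_train, x_test, y_train, y_test = train_test_split(
    experience, salary, test_size=0.25, random_state=0
)

# Fitting the regression model to the dataset.
regressor = RandomForestRegressor(n_estimators=100, random_state=50)
# ravel() turns the (n, 1) column into a 1-D array, which avoids
# scikit-learn's DataConversionWarning.
regressor.fit(x_train, y_train.ravel())

# The forest prediction is the mean of the per-tree predictions.
forest_pred = regressor.predict(x_test)
tree_preds = np.array([tree.predict(x_test) for tree in regressor.estimators_])
mean_of_trees = tree_preds.mean(axis=0)

r2 = regressor.score(x_test, y_test.ravel())
print("forest equals mean of trees:", np.allclose(forest_pred, mean_of_trees))
print("R^2 on the held-out set:", round(r2, 3))
```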

Implementing random forest regression in Python. Random forests, or random decision forests, are an ensemble learning method for classification and regression. A training script may announce the fitting step with a message such as `print("Fitting random forest regression on training set")`. A regression model fitted on the training data can then be used for prediction.

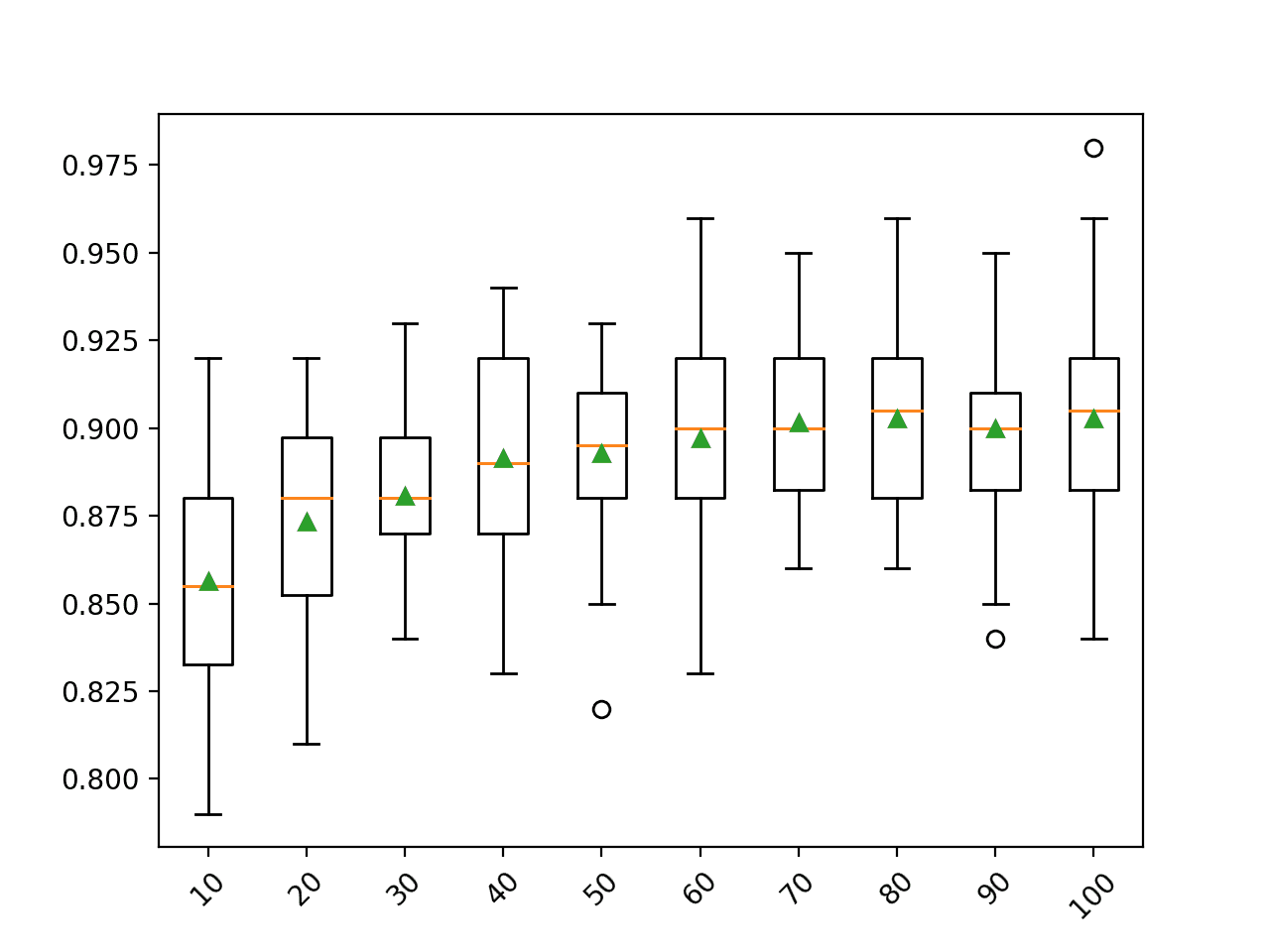

Random forests are usually introduced right after decision trees, and a common follow-up question is how to improve the accuracy of a random forest classifier. For example, a forest might combine nine decision tree classifiers. One way to tune the model is a grid search: you fit the `GridSearchCV` to the `x_train` variables and the `y_train` labels. In R, regression and classification settings emulate the formula specifications of the `randomForest` package. Another natural exercise is a comparison of random forest and extreme gradient boosting.

Data for such examples is easy to picture. Suppose, for example, that we have the number of points scored by a set of basketball players, or a population of 100 employees. In a business setting, you can input your investment data (advertisement, sales materials, cost of ...).
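The grid search step can be sketched as follows. The data, the parameter grid, and the choice of `cv=3` with `neg_mean_squared_error` scoring are assumptions made for illustration, not values from the text. Note that the search is fitted on the training features and the training target.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.model_selection import GridSearchCV, train_test_split

# Hypothetical synthetic regression data with two features.
rng = np.random.default_rng(1)
X = rng.uniform(0, 10, size=(150, 2))
y = 2.0 * X[:, 0] + np.sin(X[:, 1]) + rng.normal(0, 0.3, size=150)

x_train, x_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=1
)

# A deliberately small, illustrative grid (2 x 2 = 4 candidates).
param_grid = {"n_estimators": [20, 50], "max_depth": [3, None]}

search = GridSearchCV(
    RandomForestRegressor(random_state=0),
    param_grid,
    cv=3,
    scoring="neg_mean_squared_error",
)
# Fit the search on the training features and the training target.
search.fit(x_train, y_train)

best_params = search.best_params_
# By default GridSearchCV refits the best candidate on the full training set.
test_r2 = search.best_estimator_.score(x_test, y_test)
print("best parameters:", best_params)
print("R^2 on the held-out set:", round(test_r2, 3))
```

A larger grid multiplies the number of forests that must be trained (candidates times folds), so in practice it is worth starting with a coarse grid like this one and refining around the best candidate.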

Random forest is an ensemble machine learning technique capable of performing both regression and classification tasks using multiple decision trees. In other words, a random forest builds multiple decision trees and merges their predictions to get a more accurate and stable prediction than a single tree would give. In the variable importance example, all VIMP measures are positive, though some are small.

Random forests for regression. The first question is how a regression tree works. The final value can be calculated by taking the average of all the values predicted by all the trees in the forest. A related question is how to understand, by example, the meaning of the variable importance measures `%IncMSE` and `IncNodePurity`.
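The two importance measures can be approximated in scikit-learn, with the caveat that these are rough analogues rather than the R definitions. `permutation_importance` with MSE scoring reports how much the error grows when a feature is shuffled, which is the idea behind `%IncMSE` (R computes it on out-of-bag data and rescales it; this sketch uses the training data and raw MSE). `feature_importances_` reports the normalised total impurity decrease, which is the idea behind `IncNodePurity`. The data is synthetic and hypothetical: feature 0 drives the target and feature 1 is pure noise.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.inspection import permutation_importance

# Hypothetical data: only the first feature carries signal.
rng = np.random.default_rng(2)
X = rng.normal(size=(300, 2))
y = 3.0 * X[:, 0] + rng.normal(0, 0.5, size=300)

model = RandomForestRegressor(n_estimators=100, random_state=0).fit(X, y)

# Impurity-based importance (analogous in spirit to IncNodePurity),
# normalised by scikit-learn so that the values sum to 1.
impurity_importance = model.feature_importances_

# Permutation importance (analogous in spirit to %IncMSE): with
# neg_mean_squared_error scoring, each value is the increase in MSE
# observed after shuffling that feature.
perm = permutation_importance(
    model, X, y, n_repeats=10, random_state=0, scoring="neg_mean_squared_error"
)
permutation_mse_increase = perm.importances_mean

for i in range(X.shape[1]):
    print(
        f"feature {i}: impurity importance = {impurity_importance[i]:.3f}, "
        f"MSE increase when permuted = {permutation_mse_increase[i]:.3f}"
    )
```

Both measures should rank the informative feature far above the noise feature; where they disagree on real data, the permutation-based measure is usually the easier one to interpret, because it is expressed in units of prediction error.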


Random Forest Regression Example: In the case of a regression problem, each tree in the forest predicts a value for y (the output) for a new record.

Source: Random Forest Regression Example
